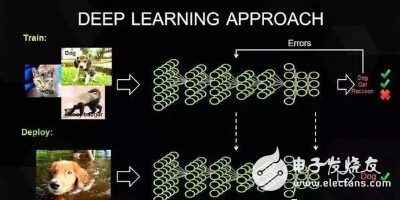

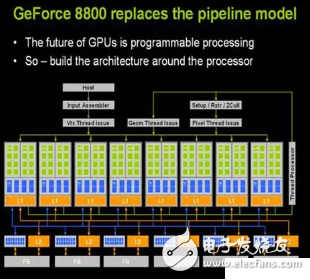

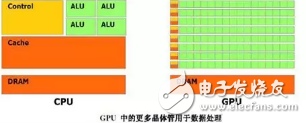

Almost all deep learning researchers use GPUs, but which of ASICs, FPGAs, and GPUs holds the most promise? Answering that depends mainly on understanding the requirements of a deep learning hardware platform. Today, Luo Zhenyu's New Year's speech went viral on WeChat Moments, but his remarks on deep learning and GPUs were disappointing.

A graphics card's processor is called the graphics processing unit (GPU). It is the "heart" of the graphics card and is similar to a CPU, except that the GPU is designed to perform the complex mathematical and geometric calculations required for graphics rendering.

To understand what hardware deep learning needs, you must first understand how deep learning works. At a high level, we have a huge data set and a chosen deep learning model. Each model has internal parameters that must be adjusted so that it learns from the data, and this adjustment is essentially an optimization problem: tuning the parameters means minimizing an objective, such as the model's error on the training data, subject to specific constraints.

Baidu's Silicon Valley Artificial Intelligence Lab (SVAIL) has proposed DeepBench, a benchmark for deep learning hardware that measures performance on basic computational operations rather than on complete learning models. The aim is to find the bottlenecks that make these computations slow or inefficient, so the focus is on designing an architecture that performs best on the basic operations of deep neural network training.

So what are these basic operations? Current deep learning algorithms are mainly convolutional neural networks (CNNs) and recurrent neural networks (RNNs). Based on these algorithms, DeepBench identifies four basic operations. Matrix multiplication: almost all deep learning models contain this operation, and it is computationally intensive.
Convolution: another commonly used operation, which accounts for most of the floating-point operations in a model. Recurrent layers: the feedback layers in a model, which are basically a combination of the first two operations. All-reduce: a sequence of operations that combines the learned parameters across devices and passes the result back to each of them before the optimization step. It is especially important when performing synchronous optimization of deep learning networks distributed across many pieces of hardware, as in the AlphaGo example.

In addition, deep learning hardware accelerators need data-level and process-level parallelism, multithreading, and high memory bandwidth. Because training on large data sets takes a long time, the hardware architecture must also have low power consumption, so performance per watt is one of the key criteria for evaluating a hardware architecture.

From its original design, the GPU executes instructions in parallel when processing graphics: a GPU core receives a set of polygon data and completes all of the processing, through to the output image, independently. Because the GPU was built from the start around a large number of execution units, these units can easily take on parallel workloads, unlike the single-threaded processing of a CPU. Modern GPUs can also execute more instructions per instruction cycle. The GPU is therefore better suited than the CPU to the large number of matrix and convolution operations in deep learning.

The demands of deep learning are quite similar to the GPU's original application, so GPU manufacturers have found a new growth area in deep learning. NVIDIA dominates the current deep learning market with its massively parallel GPUs and its dedicated GPU programming framework, CUDA.
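The all-reduce operation listed among DeepBench's four basic operations can be made concrete with a small single-process simulation. The sketch below assumes the ring algorithm, which is one common way to implement a sum all-reduce; the text does not say which algorithm DeepBench or any particular system uses. The function name `ring_allreduce` and the list-of-lists layout (one equal-length vector per worker) are hypothetical choices for illustration.

```python
from typing import List


def ring_allreduce(local: List[List[float]]) -> List[List[float]]:
    """Simulate a sum all-reduce over len(local) workers arranged in a ring.

    local[i] is worker i's vector (for example, its gradients); all vectors
    must have the same length. Every worker ends up holding the element-wise
    sum of all workers' vectors. The input lists are not modified.
    """
    p = len(local)
    n = len(local[0])
    # Split each vector into p contiguous chunks; chunk c covers [lo, hi).
    bounds = [(c * n // p, (c + 1) * n // p) for c in range(p)]
    buf = [list(v) for v in local]  # each worker's working copy

    # Phase 1, reduce-scatter: in step s, worker i sends chunk (i - s) % p to
    # its right neighbour, which adds it into its own copy. After p - 1 steps,
    # worker i holds the complete sum for chunk (i + 1) % p.
    for s in range(p - 1):
        messages = []
        for i in range(p):
            lo, hi = bounds[(i - s) % p]
            messages.append(((i + 1) % p, lo, buf[i][lo:hi]))  # slice is a copy
        for dst, lo, data in messages:
            for j, value in enumerate(data):
                buf[dst][lo + j] += value

    # Phase 2, all-gather: in step s, worker i forwards the finished chunk
    # (i + 1 - s) % p to its right neighbour, which overwrites its copy.
    for s in range(p - 1):
        messages = []
        for i in range(p):
            lo, hi = bounds[(i + 1 - s) % p]
            messages.append(((i + 1) % p, lo, buf[i][lo:hi]))
        for dst, lo, data in messages:
            buf[dst][lo:lo + len(data)] = data

    return buf


if __name__ == "__main__":
    # Three hypothetical workers, each holding a 4-element gradient vector.
    grads = [[1.0, 2.0, 3.0, 4.0],
             [10.0, 20.0, 30.0, 40.0],
             [100.0, 200.0, 300.0, 400.0]]
    summed = ring_allreduce(grads)
    # For synchronous data-parallel training, each worker then averages the sum.
    averaged = [[x / len(grads) for x in v] for v in summed]
    print(summed[0])    # [111.0, 222.0, 333.0, 444.0]
    print(averaged[0])  # [37.0, 74.0, 111.0, 148.0]
```

A property of the ring approach is that each worker sends about 2(p - 1)/p times the size of its vector in total, so per-worker traffic stays roughly constant as more workers are added. In practice this step is handled by communication libraries such as MPI (`MPI_Allreduce`) or NVIDIA's NCCL rather than written by hand.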
However, more and more companies have developed hardware to accelerate deep learning, such as Google's Tensor Processing Unit (TPU), Intel's Xeon Phi Knights Landing, and Qualcomm's neural network processor (NNU). Thanks to new technologies and data centers filled with GPUs, deep learning has gained a huge range of possible applications, and much of the work for companies now is finding the time and computing resources needed to explore these possibilities. This work has greatly widened the design space, and the scope of research has expanded rapidly, moving beyond image recognition into other tasks such as speech recognition and natural language understanding.

Because the range of applications keeps growing, Microsoft, while increasing the computing power of its GPU clusters, is also exploring other dedicated processors, including FPGAs, chips that can be programmed for specific tasks such as deep learning. This work has already made waves in the technology and artificial intelligence world: Intel completed the largest acquisition in its history by buying Altera, a company focused on FPGAs. The advantage of an FPGA is that it can be reconfigured when computing requirements change. However, the most versatile and mainstream solution is still to use GPUs to process large numbers of mathematical operations in parallel. Unsurprisingly, the main driver of the GPU approach is the market leader, NVIDIA.

NVIDIA's flagship graphics card: the Pascal Titan X

In fact, the renaissance of artificial neural networks after 2009 has been closely tied to GPUs: that year, several Stanford researchers showed that GPUs could train deep neural networks in a reasonable amount of time. This directly fueled a wave of general-purpose GPU computing. William J. Dally, NVIDIA's chief scientist and head of Stanford's VLSI architecture group, said: "Everyone in the industry is now doing deep learning. In this respect, the GPU has almost reached its best."