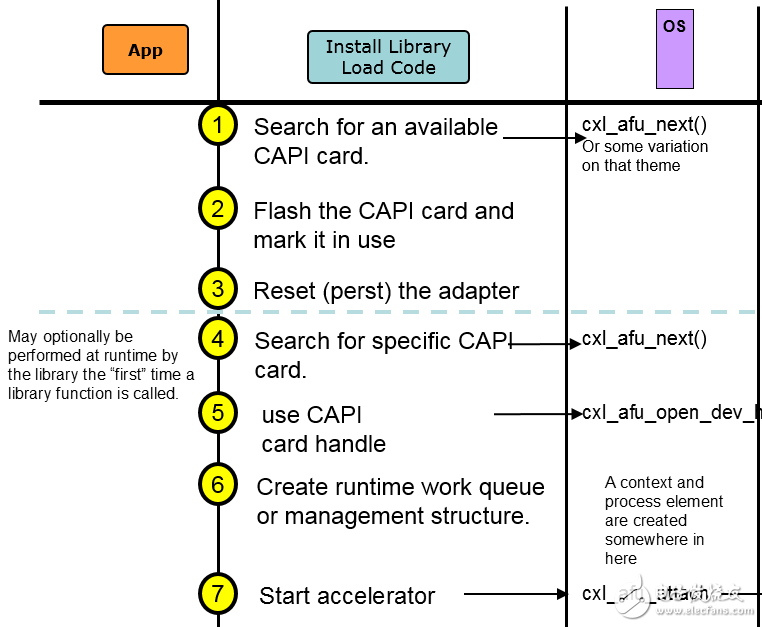

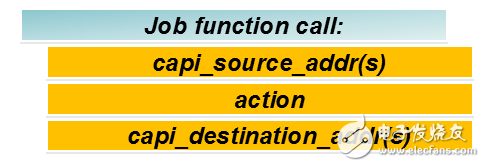

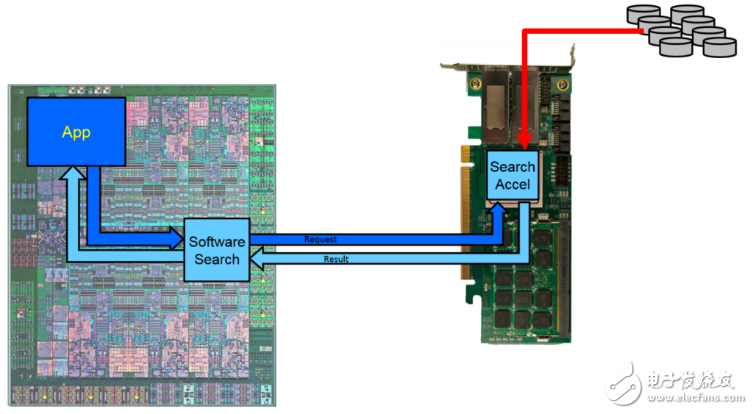

At the previous OpenPOWER Europe Summit, we launched a new framework designed to make it easier for developers to adopt CAPI to accelerate their applications. The CAPI Storage, Networking, and Analytics Programming Framework, or CAPI SNAP for short, was developed through a collaboration between multiple OpenPOWER member companies and is now being tested with a number of early-adopter partners.

But what exactly is CAPI SNAP? To answer that question, I want to give you an in-depth look at how CAPI SNAP works. The framework extends CAPI technology by simplifying both the API (the call to an accelerated function) and the coding of the accelerated function itself. With CAPI SNAP, your application gains performance both from FPGA acceleration and from having the compute resources closer to massive amounts of data.

ISVs will be particularly interested in the programming support this framework provides. The framework API makes it easy for an application to call an accelerated function. The FPGA framework logic implements all of the computer engineering interface logic, data movement, caching, and prefetching, leaving programmers free to focus solely on developing the accelerator function.

Without the framework, the application programmer must create a runtime acceleration library that performs the tasks shown in Figure 1. With CAPI SNAP, the application only needs to make a function call, as shown in Figure 2. This simple API takes the source data (address/location), the specific accelerated action to perform, and the destination (address/location) for the resulting data.

Moving the computation closer to the data

Figure 4 shows how the source data flows into the accelerator through the QSFP+ port, where the FPGA performs the search function. The framework then forwards the search results to system memory.
The performance advantage of the framework is twofold. Table 1 compares the two approaches and shows a threefold improvement in performance. By bringing the computation closer to the data, the FPGA improves the ingest (or egress) rate compared with moving all of the data into system memory.

| | CAPI SNAP framework | Software only |
|---|---|---|
| Ingest 100 GB of data | Two 100 Gb/s ports: 4 seconds | One PCIe Gen3 x8 NIC: 12.5 seconds |
| Perform the search | <1 microsecond | <100 microseconds |
| Send results to system memory | <400 nanoseconds | 0 |
| Total time | 4.0000014 seconds | 12.50001 seconds |

The API for calling accelerated actions is not the only simplification in CAPI SNAP. The framework also simplifies programming the "action" code in the FPGA. The framework takes care of retrieving the source data (whether it is in system memory, storage, the network, etc.) and sending the results to the specified destination. Programmers working in a high-level language (such as C/C++ or Go) only need to focus on their data transformation, or "action." A framework-compatible compiler converts the high-level language into Verilog, which is then synthesized using Xilinx's Vivado toolset. The open source release will include a variety of fully functional example accelerators that give users a starting point along with the full port declarations needed to receive source data and return destination data.
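
To make the idea of an "action" concrete, here is a minimal sketch of a search written as a pure data transformation. It is only a Python model for illustration: a real action would be written in C/C++ or Go and compiled for the FPGA, and the name `search_action` and the block-by-block delivery of the source data are assumptions, not part of CAPI SNAP.

```python
from typing import Iterable, Iterator


def search_action(blocks: Iterable[bytes], pattern: bytes) -> Iterator[int]:
    """Yield the absolute offset of every match of `pattern` in a block stream.

    Hypothetical model: the caller (standing in for the framework) delivers
    the source data block by block; the action only scans it.
    """
    if not pattern:
        raise ValueError("pattern must be non-empty")
    keep = len(pattern) - 1   # bytes to carry over so boundary matches are seen
    tail = b""                # carried-over bytes from earlier blocks
    consumed = 0              # number of stream bytes that precede `tail`
    for block in blocks:
        window = tail + block
        start = window.find(pattern)
        while start != -1:
            yield consumed + start
            start = window.find(pattern, start + 1)
        tail = window[len(window) - keep:] if keep else b""
        consumed += len(window) - len(tail)


# The second and third matches span the boundary between the two blocks.
offsets = list(search_action([b"abcab", b"cabc"], b"abc"))
```

The function never decides where the data comes from or where the offsets go, which mirrors the division of labor described above: data movement belongs to the framework, the transformation to the programmer.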

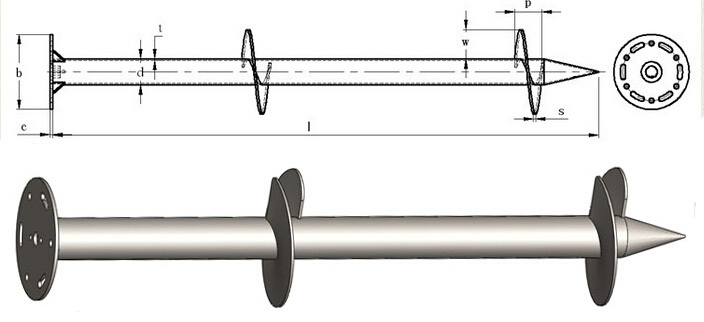

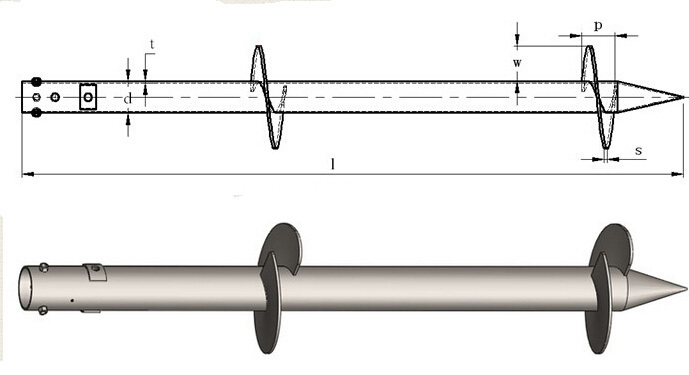

Figure 2: Calling an accelerated function with CAPI SNAP. The framework moves the data to the accelerator and stores the results.

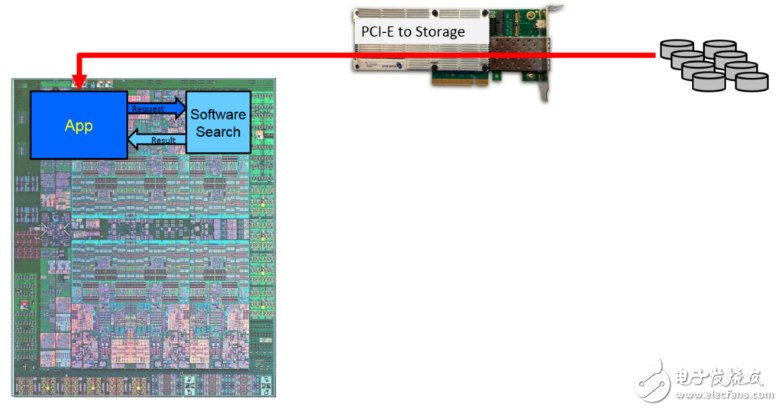

The simplicity of the API parameters is both elegant and powerful. The source and destination addresses are not limited to coherent system memory locations; they can also be attached storage, network, or memory addresses. For example, if the framework card has attached storage, the application can read a large block (or many blocks) of data from that storage, perform an operation such as a search, intersection, or merge on the data in the FPGA, and send the results to a specified destination address in host system memory. This approach offers a large performance advantage over the standard software approach shown in Figure 3.
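
The shape of such a call (source, action, destination) can be modeled in a few lines. This is a hypothetical Python sketch, not the CAPI SNAP API: `Location`, `call_action`, `find_first`, and the dictionary standing in for storage and memory are all invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


# Hypothetical model only: none of these names come from CAPI SNAP.
@dataclass(frozen=True)
class Location:
    kind: str      # "memory", "storage", or "network"
    address: int   # address or block offset within that medium


# Stand-in for everything the framework can reach, keyed by (kind, address).
Media = Dict[Tuple[str, int], bytes]


def call_action(media: Media, source: Location,
                action: Callable[[bytes], bytes],
                destination: Location) -> None:
    """Fetch the source data, apply the action, and store the result."""
    data = media[(source.kind, source.address)]
    media[(destination.kind, destination.address)] = action(data)


def find_first(pattern: bytes) -> Callable[[bytes], bytes]:
    """Build a search action that returns the first match offset as text."""
    return lambda data: str(data.find(pattern)).encode()


# Source is a block on attached storage; the result lands in host memory.
media: Media = {("storage", 0): b"log: ok ok ERROR ok"}
call_action(media, Location("storage", 0), find_first(b"ERROR"),
            Location("memory", 0x1000))
result = media[("memory", 0x1000)]
```

The point of the sketch is that the caller names only three things. Changing the source from storage to a network location would not change the call, only the `Location` passed in.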

Figure 3: Application search function in software (no acceleration framework)

Figure 4: Application with an accelerated framework search engine

1. By moving the computation (in this case, the search) closer to the data, the FPGA gets higher-bandwidth access to the data.

2. The FPGA-accelerated search is faster than the software search.
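
Both effects can be checked with a short calculation based on the figures in Table 1. The sketch below assumes a nominal 8 GB/s for a PCIe Gen3 x8 link, which is the rate implied by the table's 12.5 seconds, and uses the table's upper bounds for the search and result-transfer times.

```python
DATA_BYTES = 100e9                        # 100 GB of source data

# Framework path: two 100 Gb/s ports feed the FPGA directly.
PORT_BITS_PER_S = 100e9
fpga_ingest_s = DATA_BYTES * 8 / (2 * PORT_BITS_PER_S)

# Software path: one PCIe Gen3 x8 NIC (assumed nominal rate of 8 GB/s).
PCIE_GEN3_X8_BYTES_PER_S = 8e9
sw_ingest_s = DATA_BYTES / PCIE_GEN3_X8_BYTES_PER_S

# Upper bounds from Table 1 for the search and for sending the results.
fpga_total_s = fpga_ingest_s + 1e-6 + 400e-9
sw_total_s = sw_ingest_s + 100e-6 + 0.0

speedup = sw_total_s / fpga_total_s

print(f"FPGA ingest:     {fpga_ingest_s:.1f} s")
print(f"Software ingest: {sw_ingest_s:.1f} s")
print(f"Speedup:         {speedup:.2f}x")
```

Ingest time dominates both totals, so the roughly threefold gain comes almost entirely from the first effect (bandwidth); the faster search contributes only microseconds at this data size.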

POWER+CAPI SNAP framework